Disclaimer: I did this experiment out of curiosity, not for academic purposes. I haven’t double-checked the results, and I used arbitrary, feels-good-in-my-gut constants when running the tests.

In the last post I built a classifier from the subtitles of movies that had failed or passed the Bechdel test. I used a dataset of about 2400 movie subtitles, each labeled by whether or not the movie had passed the test. The labels were obtained from bechdeltest.com.

In this post I will explore the inner workings of the classifier. What words and phrases reveal if a movie will pass or fail?

Let’s quickly recap what the Bechdel test is. To pass:

- The movie has to have at least two women in it,

- who talk to each other,

- about something besides a man.

Keywords from subtitles

It’s possible to extract keywords from classifiers. Keywords are discriminating indicators (words, phrases) for a specific class (passed or failed). There are many ways to weight them. I let the classifier sort every keyword according to the probability of belonging to a class.
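The exact classifier and weighting aren't spelled out here, so the following is only a minimal sketch of one way to sort keywords by class probability. It assumes a simple count-based estimate of P(passed | word appears in the movie) with add-alpha smoothing; the function name `rank_keywords`, the input format and the toy data are all hypothetical.

```python
from collections import Counter

def rank_keywords(docs, labels, alpha=1.0):
    """Rank words by a smoothed estimate of P(passed | word appears in movie).

    docs   -- list of token lists, one per movie (assumed input format)
    labels -- list of booleans, True if the movie passed the Bechdel test
    alpha  -- add-alpha smoothing constant (an arbitrary choice)
    """
    passed_df = Counter()  # number of passed movies containing each word
    failed_df = Counter()  # number of failed movies containing each word
    for tokens, passed in zip(docs, labels):
        target = passed_df if passed else failed_df
        target.update(set(tokens))  # count each word once per movie

    vocab = set(passed_df) | set(failed_df)
    scores = {
        w: (passed_df[w] + alpha) / (passed_df[w] + failed_df[w] + 2 * alpha)
        for w in vocab
    }
    # Highest score first: strongest "passed" keywords at the top,
    # strongest "failed" keywords at the bottom.
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

# Made-up toy data, not taken from the real dataset.
docs = [["lipstick", "dress"], ["rifle", "lads"], ["lipstick", "rifle"], ["lads"]]
labels = [True, False, True, False]
ranking = rank_keywords(docs, labels)
```

This estimate ignores any imbalance between the number of passed and failed movies, so a real classifier would likely weight things differently.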

Common, strong, keywords

To get a general overview we can disregard the most extreme keywords and instead consider keywords that appear more frequently. I extracted keywords that occurred in at least 100 different movies (roughly 4% of the entire dataset).

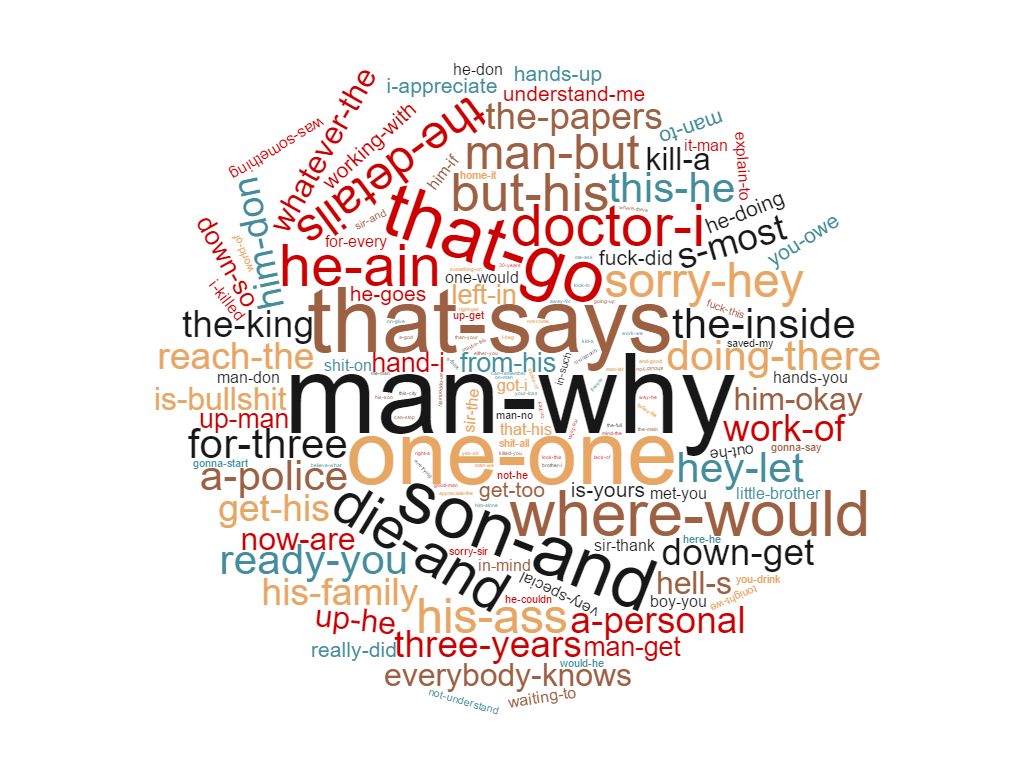

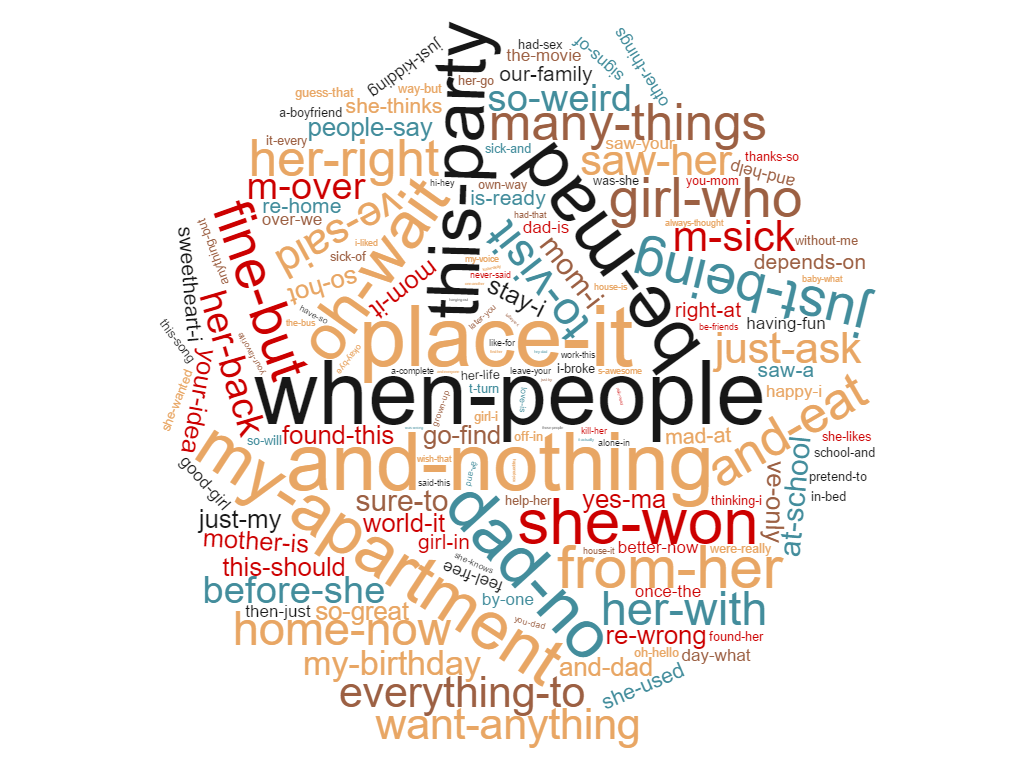

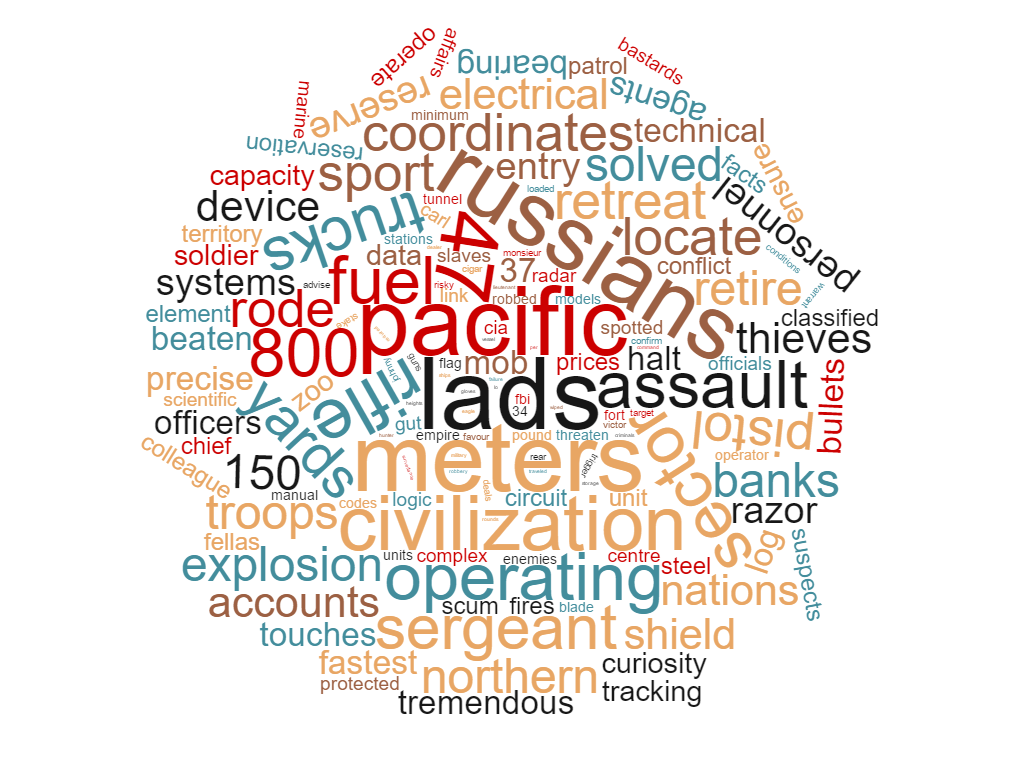

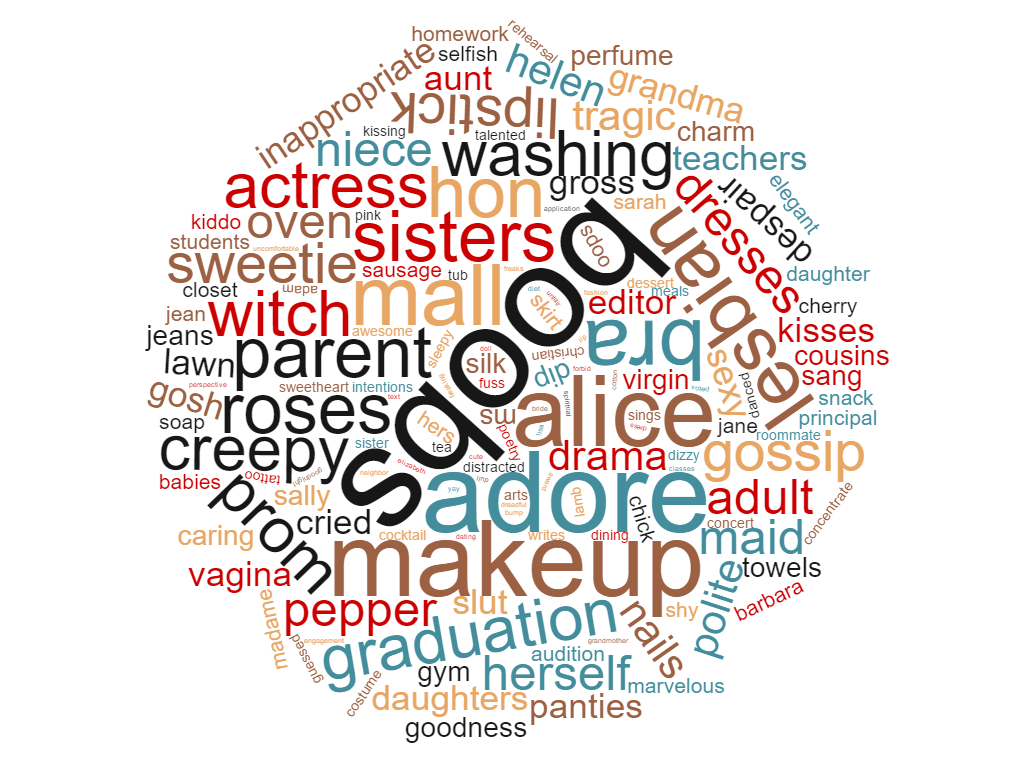

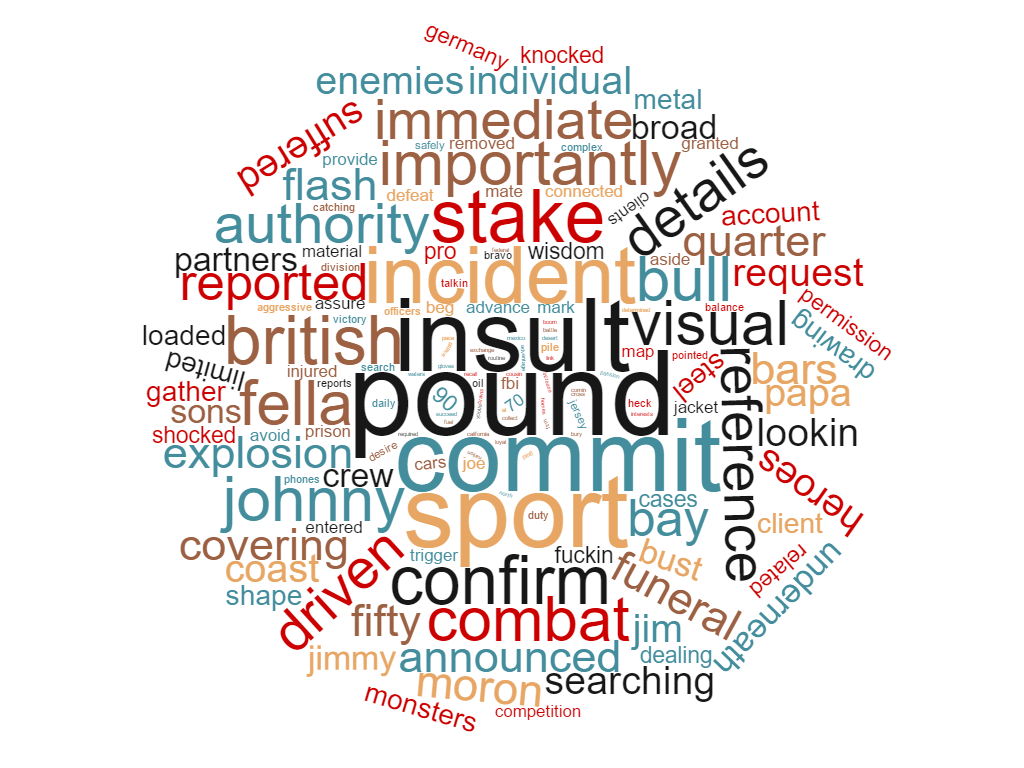

To start with, I looked at unigrams (single words), removed non-alphanumeric characters and transformed the text to lower case. To visualize the result I created two word clouds: one with keywords that indicate a failed test, and one with keywords that are discriminative for a passed test.

Bigger words mean a higher probability of either failing or passing.
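The preprocessing described above (lowercased unigrams, kept only if they occur in enough different movies) could look roughly like the sketch below. It assumes non-alphanumeric characters act as separators, which would explain why ‘47’ shows up on its own; the helper names and the sample lines are hypothetical.

```python
import re
from collections import Counter

def unigrams(text):
    """Lowercase the text and split it into runs of ASCII letters and digits."""
    return re.findall(r"[a-z0-9]+", text.lower())

def frequent_vocabulary(subtitles, min_movies):
    """Return the words that occur in at least `min_movies` different movies."""
    df = Counter()  # document frequency: movies containing each word
    for text in subtitles:
        df.update(set(unigrams(text)))  # count each word once per movie
    return {w for w, n in df.items() if n >= min_movies}

# Made-up subtitle snippets, one string per movie.
subtitles = ["Grab the AK-47, lads!", "Lads, don't shoot.", "Nice dress."]
tokens = unigrams(subtitles[0])
vocab = frequent_vocabulary(subtitles, min_movies=2)
```

On the real dataset `min_movies` would be 100 for the all-time clouds.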

Keywords like ‘lads’, ‘assault’, ‘rifle’, ‘47’ (from AK-47) and ‘russian’ seem to indicate a failed Bechdel test. Words like ‘logic’, ‘solved’, ‘systems’, ‘capacity’ and ‘civilization’ are also indicators of a failed Bechdel test.

The word ‘boobs’ appears a lot more in subtitles of movies that passed the Bechdel test than in those that failed. I don’t know why, but I’ve double-checked it. Overall there is a lot of ‘lipstick’, ‘babies’, ‘washing’, ‘dresses’ and so on.

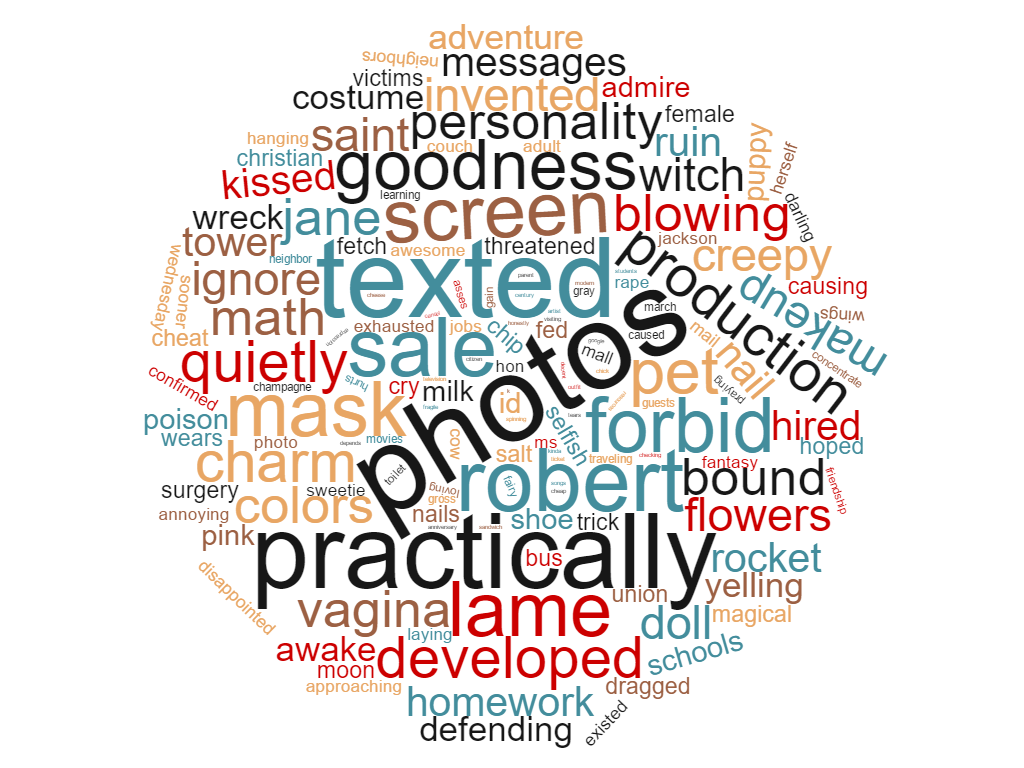

Keywords only from 2014 and 2015, did anything change?

The word clouds above are generated from movies released from 1892 up until now, so I wanted to check whether anything has changed recently. Below are two word clouds from 2014 and 2015 only. There was less training data (97 and 142 movies), and I only looked at words that appeared in 20 or more titles to avoid extreme features.

Looking at the recent failed word cloud, it seems like there are fewer lads, explosions and AK-47s. Also, Russia isn’t as scary anymore; goodbye, the 80s. In general there seems to be less of the war stuff.

At a quick glance something seems different in the passed cloud too: we find words like ‘math’, ‘invented’, ‘developed’, ‘adventure’ and ‘robert’. Wait, what, Robert? It turns out that ‘Robert’ occurs in 20 movies that passed and 3 that failed in the last two years. Robert is probably noise (the dataset is too small). Furthermore, words like ‘washing’, ‘mall’, ‘slut’ and ‘shopping’ have been neutralized. Interestingly, a new modern keyword, ‘texted’, is used a lot in movies that passed the Bechdel test.

From a very informal point of view, it looks like we are moving in the right direction. But for a better understanding of how language has changed over time from a Bechdel perspective, I think it’s necessary to set up a more controlled experiment, one where you can follow keywords over time as they gain and lose usage, like Google Trends. Please feel free to explore it and let me know what you find out 😉
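As a starting point for that kind of experiment, here is a minimal sketch that computes, per year, the share of passed and of failed movies whose subtitles contain a given keyword. The record layout (`year`, `passed`, `tokens`) and the sample data are made up for illustration.

```python
from collections import defaultdict

def keyword_share_by_year(movies, keyword):
    """Per year, the fraction of passed and of failed movies containing `keyword`.

    movies -- iterable of dicts with hypothetical keys "year", "passed", "tokens"
    A share is None when there were no movies of that class in that year.
    """
    totals = defaultdict(lambda: {True: 0, False: 0})
    hits = defaultdict(lambda: {True: 0, False: 0})
    for m in movies:
        totals[m["year"]][m["passed"]] += 1
        if keyword in m["tokens"]:
            hits[m["year"]][m["passed"]] += 1

    trend = {}
    for year in sorted(totals):
        trend[year] = {
            label: (hits[year][passed] / totals[year][passed]
                    if totals[year][passed] else None)
            for label, passed in (("passed", True), ("failed", False))
        }
    return trend

# Made-up records, not real data.
movies = [
    {"year": 1985, "passed": True, "tokens": {"washing", "dress"}},
    {"year": 1985, "passed": True, "tokens": {"adventure"}},
    {"year": 1985, "passed": False, "tokens": {"rifle"}},
    {"year": 2015, "passed": True, "tokens": {"texted", "adventure"}},
]
trend = keyword_share_by_year(movies, "washing")
```

With only a handful of movies per year the shares will be very noisy, so grouping by decade is probably a more sensible first step.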

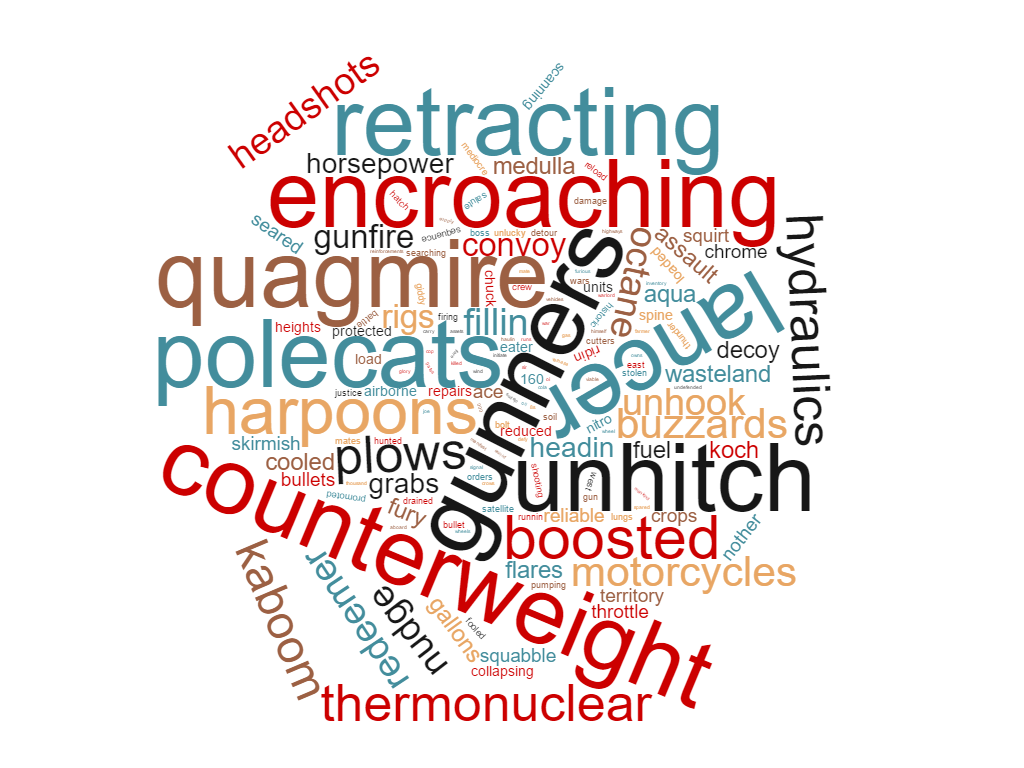

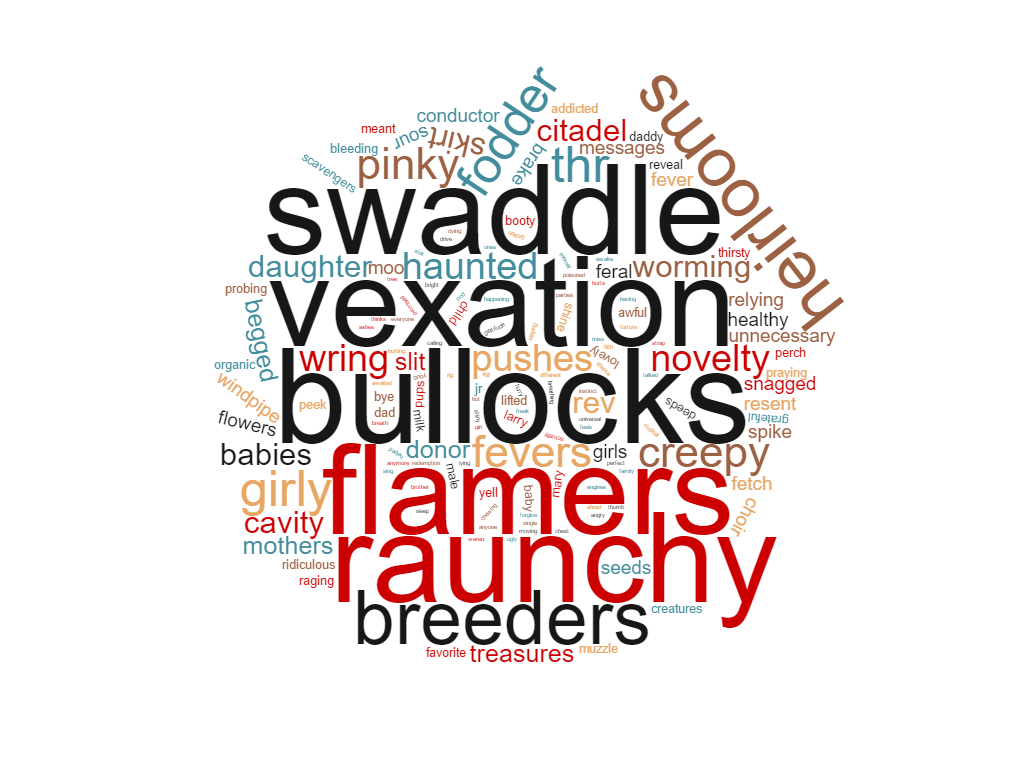

Looking at a recent movie, Mad Max: Fury Road

I decided to examine the subtitles of a recent movie that had passed the test, Mad Max: Fury Road. To do this I trained a classifier on all subtitles since 1892, except the ones from Mad Max movies. Then I extracted the keywords from the Mad Max: Fury Road subtitles.
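The hold-out step could be sketched as follows, assuming each movie is a record with a `title` and that training yields a per-word score (an estimate of the probability of passing). Both helper functions, the record layout and the scores below are hypothetical, not the actual code behind the word clouds.

```python
def split_by_title(movies, needle):
    """Separate movies whose title contains `needle` (case-insensitive)."""
    needle = needle.lower()
    train = [m for m in movies if needle not in m["title"].lower()]
    held_out = [m for m in movies if needle in m["title"].lower()]
    return train, held_out

def movie_keywords(movie_tokens, word_scores, top_n=3):
    """Pick the words of one movie with the most extreme trained scores.

    word_scores maps word -> estimated P(passed | word); words that were
    never seen during training are ignored.
    """
    seen = {w: word_scores[w] for w in set(movie_tokens) if w in word_scores}
    by_score = sorted(seen, key=seen.get)  # lowest score first
    return {"failed": by_score[:top_n], "passed": by_score[::-1][:top_n]}

# Made-up records and scores for illustration only.
movies = [
    {"title": "Mad Max"},
    {"title": "Mad Max: Fury Road"},
    {"title": "Some Other Film"},
]
train, held_out = split_by_title(movies, "mad max")

word_scores = {"babies": 0.8, "flowers": 0.7, "war": 0.3, "rifle": 0.2}
tokens = ["war", "babies", "rifle", "chrome", "flowers"]
keywords = movie_keywords(tokens, word_scores, top_n=2)
```

Leaving out every Mad Max movie, not just Fury Road, keeps franchise-specific words from leaking into the training data.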

This movie passes the Bechdel test. An interesting point is that despite the anecdotal presence of words such as ‘babies’, ‘girly’ and ‘flowers’ (in the passed class), the words that surface are not linked to traditional femininity, unlike in many other movies that have passed the test. Overall it’s much harder to differentiate between the two clouds.

If you haven’t seen it yet, go and watch it. It’s very good!

Conclusion

If my experiment was carried out correctly, or at least well enough (read the disclaimer at the top :), passing the Bechdel test doesn’t imply a gender-equal movie. Even if it certifies that the movie has…

- At least two women

- that speak to each other

- about something other than men …

…unfortunately this ‘something other than men’ often seems to be something linked to ‘traditional femininity’. The good news is that when looking only at more recent data, the trend seems to be getting more neutral: ‘washing’ is falling down the list while ‘adventure’ rises.

It would be interesting to come up with a test that also captures the content, as well as how women (and others) are represented. Designing the perfect test will probably be infinitely hard, especially for us humans. We seem to have a hard time settling whether or not any given movie is gender equal (just google any movie discussion). Perhaps with enough data, machine learning can design a test that reveals a multidimensional score of how well and why a movie passes or fails certain tests, not only examining gender but looking at all kinds of diversity.

Finally, just for the sake of clarity, I don’t think the Bechdel test is bad. It certainly helps us think about women’s representation in movies. But maybe don’t always expect a non-sexist, gender-equal movie just because it passes the Bechdel test.

Credits

Much kudos to bechdeltest.com for maintaining their database. Thanks to omdbapi.com for a simple-to-use API. The word clouds were generated by wordclouds.com.

Appendix

For bigrams I also removed non-alphanumeric characters, which is why you can see some weird stuff like ‘you-don’, which should be ‘you-don’t’. However, I decided to keep this because it can capture some interesting features like ‘s-fault’ (e.g. ‘dad’s fault’).

Spaces have been replaced by ‘-’ so that the keywords in the word cloud make sense.
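A minimal sketch of how such bigrams can come about, assuming non-alphanumeric characters (apostrophes included) are treated as separators and neighbouring tokens are joined with ‘-’. The function name and the sample sentence are made up.

```python
import re

def bigrams(text):
    """Lowercase, split on non-alphanumeric characters, join neighbours with '-'."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [f"{a}-{b}" for a, b in zip(tokens, tokens[1:])]

result = bigrams("You don't know, it's Dad's fault")
print(result)
# ['you-don', 'don-t', 't-know', 'know-it', 'it-s', 's-dad', 'dad-s', 's-fault']
```

The apostrophe splits ‘don't’ into ‘don’ and ‘t’, which is where ‘you-don’ and ‘s-fault’ come from.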

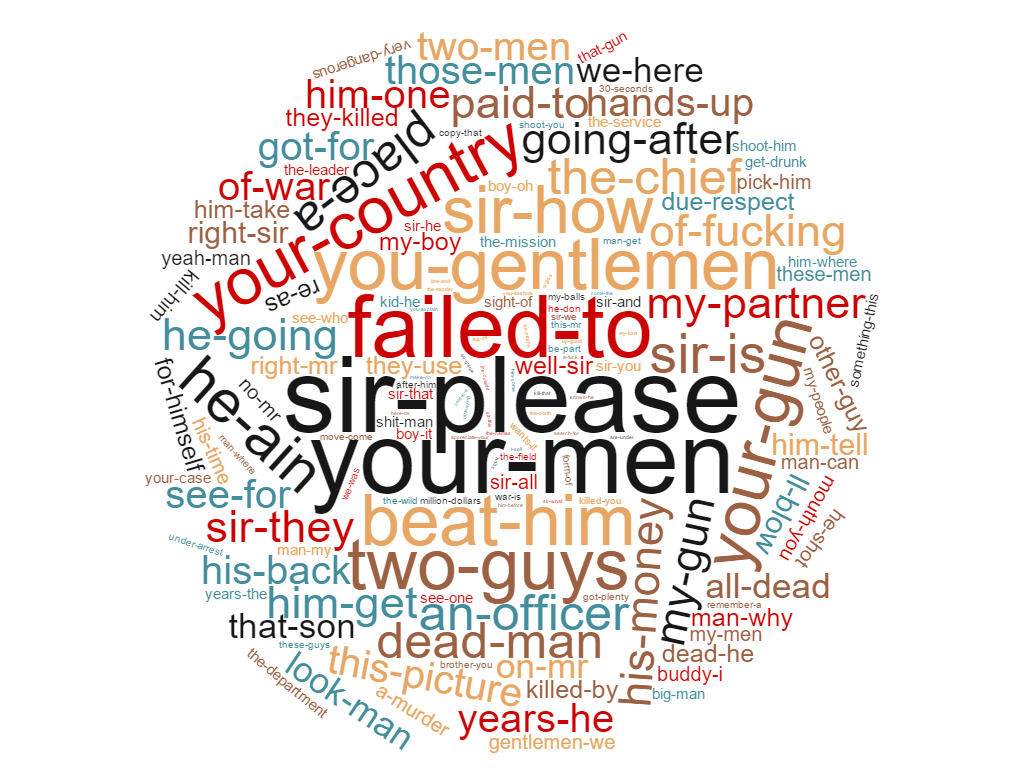

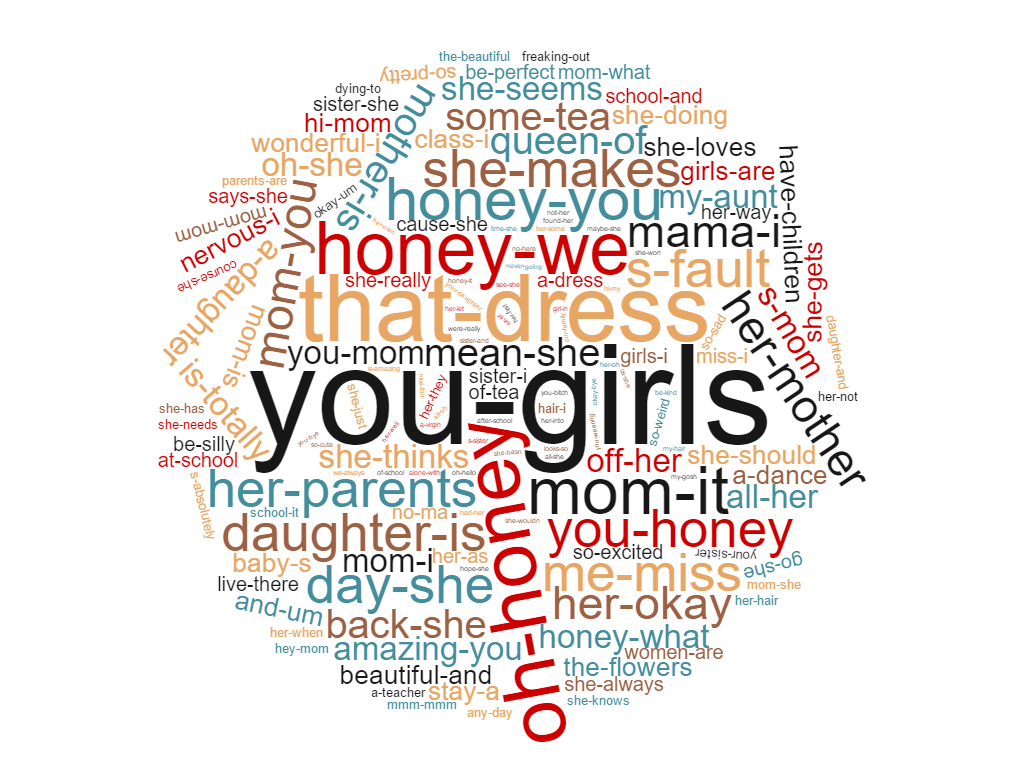

All time bigram keywords

One interesting thing here is the ‘your men’ vs ‘you girls’. I will leave the analysis to you 😉

2014 and 2015 bigram keywords